I have advanced skills in Python/Django, R, and PostgreSQL,

and I've implemented those skills on projects with users around the world.

I can build pipelines,

databases, and

web apps to meet a wide range of user needs.

More broadly, I can help researchers conceptualize new tools

and find practical and affordable ways to build them.

👇 Scroll down to see recent projects on which I've applied these skills 👇

2023

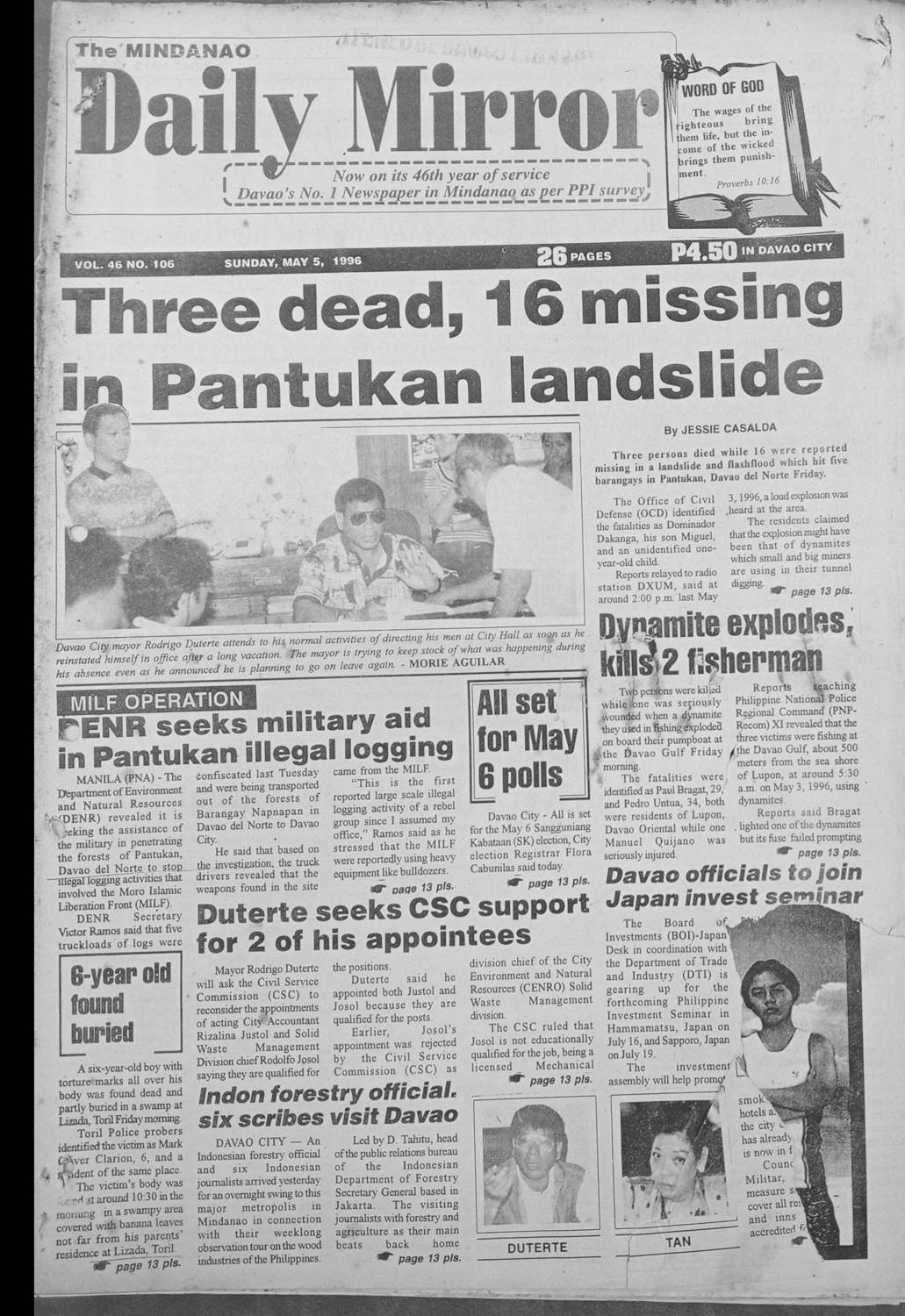

Davao Newspaper Library

Large-scale digitization of historical newspapers

Summary

In collaboration with partners in Davao City, The Davao Newspaper Library

allows browse and full text search of more than 300,000

pages of newspapers published since 1972 in Davao City, Philippines.

It is one of the largest fully-searchable historical collections in the

Philippines.

The project built using free or low-cost technologies and can serve

as a model for other research-driven cultural heritage

projects in under-resourced areas.

Details

The project was inspired by a simple problem: I needed access

to historical documents to do my PhD research, but there were

few large collections in Davao City that I would be able to access

and review in the six months I had for fieldwork.

To solve that problem, I worked with the Ateneo de Davao University

and the Mindanao Times to digitize large parts of their periodicals

collections. I provided the core technology, all of which

I wrote from scratch, and the Ateneo provided logistical support.

Together, we hired a half dozen research assistants who, using their

smart phones to save on equipment costs, created the raw images

we used in the library. I then OCR'd the images, stored the results

in a database, and built a simple web app and search engine to

query the database.

The Library is freely available for academic research. Please

email me to request access.

Technologies Used

Ubuntu

Python

PostgreSQL

Microsoft Azure

Standalone Outputs

Raw scans

Photos were taken using Research Assistants' mobile phone

cameras in one of the library's reading rooms. Photos

were taken without studio lighting, just natural and overhead fluorescent

lights.

We had some undesirable shadows in our initial

round of scanning, but we fixed this using 💡 table lamps 💡

Clean Scans

Using the returns from an OCR service, we cropped each

image, rotated it to the correct reading orientation,

and saved a 1500 by 1500 pixel black-and-white version to reduce file size.

This is the primary way users of the Library interact

with the scans.

Searchable PDFs

We also output a searchable PDF for each image, which users can programmatically assemble into issues and download.

2022

News Story Tracker

Using web scraping and string similarity to track news article distribution

Summary

The Granite State News Collaborative's story tracker uses web

scraping and string similarity algorithms to record when

specific news stories are republished by one of the Collaborative's

nearly two dozen partners.

This metric is crucial for helping the Collaborative understand

its reach and impact, and for helping allocate future reporting

resources among the Collaborative's partners.

Details

The project was request by the Collaborative's Director,

who needed specific statistics about how many stories the

Collaborative's partners had shared and reprinted during its

first few years in operation.

To solve that problem, I wrote a program in R

and Python that scraped all the partners' websites, scraped a master Google Drive

of Collaborative stories, then looked for matches between the

two sets of stories. The results were automatically updated

to a Google Spreadsheet.

Between 2020 and the end of 2022, the tracker found 4,343 reprints

across the partner websites.

Sample Output